Ph.D. in Computer Science @ The Ohio State University

Hi! I am a second-year PhD student majoring in Computer Science at The Ohio State University, working with Prof. Srinivasan Parthasarathy and Prof. Eric Fosler-Lussier. In the summer of 2025, I interned at Adobe Research, where I was mentored by Dr. Sayan Nag.

I completed my Master's in Computer Science at the University of Pennsylvania, where I was grateful to be guided by Prof. Mark Yatskar. Previously, I also had the opportunity to work with Prof. Ranjay Krishna and Prof. Maneesh Agrawala. I received my B.Tech in Computer Science from Veermata Jijabai Technological Institute (VJTI). My research interests lie at the intersection of vision and language, which has been the focus of both my past and current research.

As increasingly powerful deep learning models in vision and language emerge, fundamental questions such as “What do the models actually learn?” and “What does the model base its predictions on?” still persist. My long-term research goal is to build robust and reliable models that can answer these questions. To that end, I wish to explore two directions:

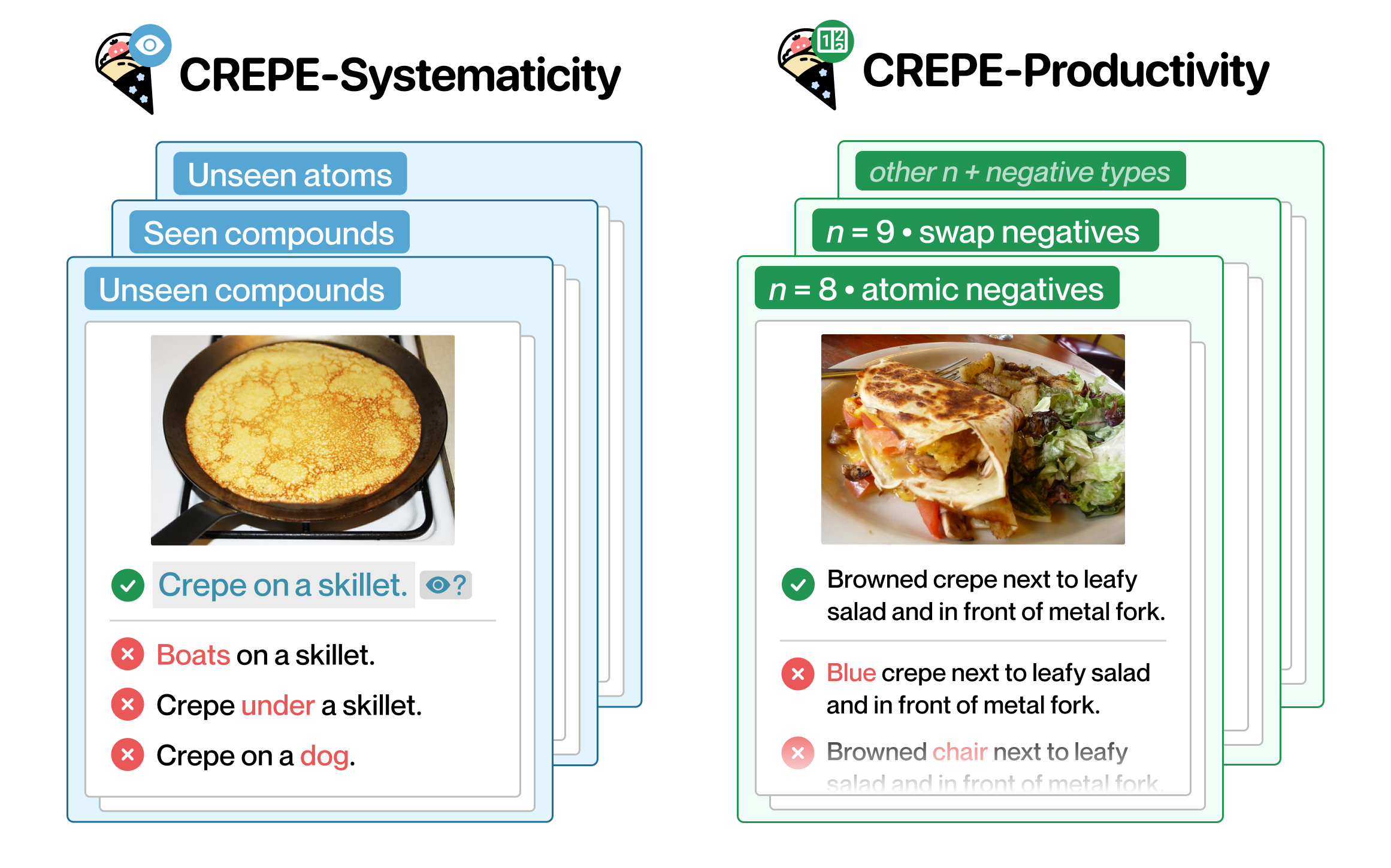

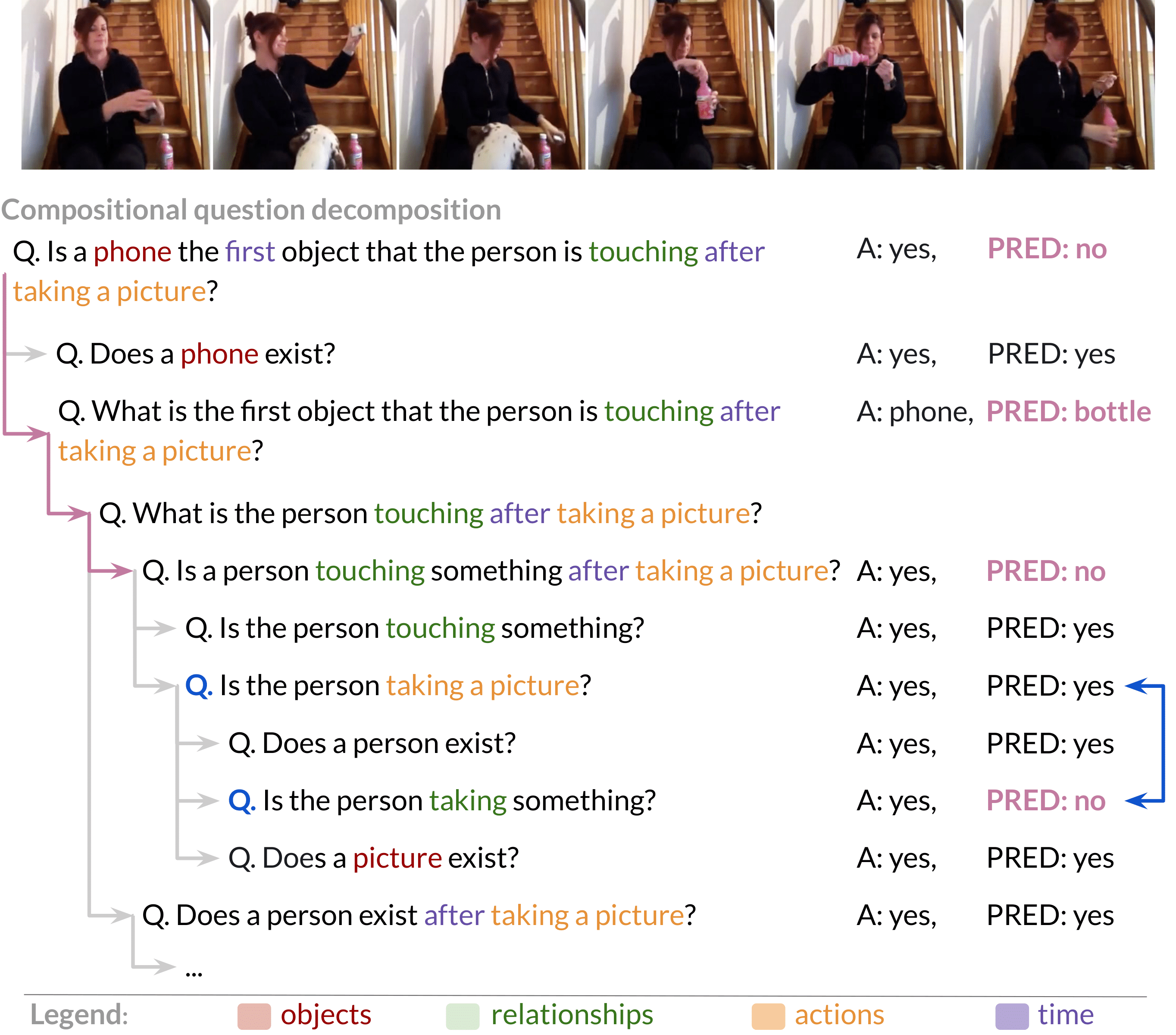

(i) leveraging human-like intuitions, such as learning compositionally, to boost the robustness of models and

(ii) building interpretable models such that we can use correctability methods to make sure

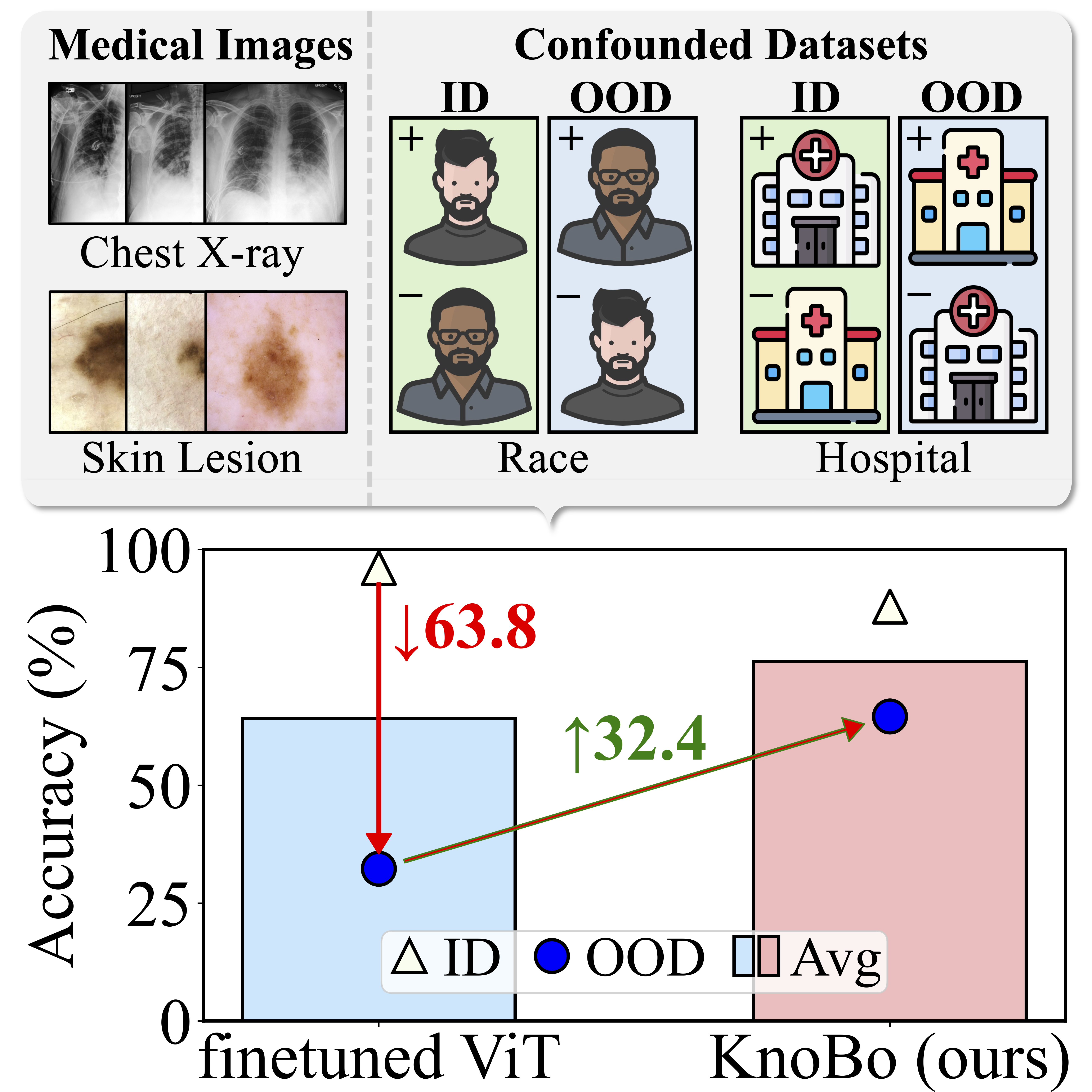

they don't rely on biases

and thereby facilitating reliability and robustness.